Contents:

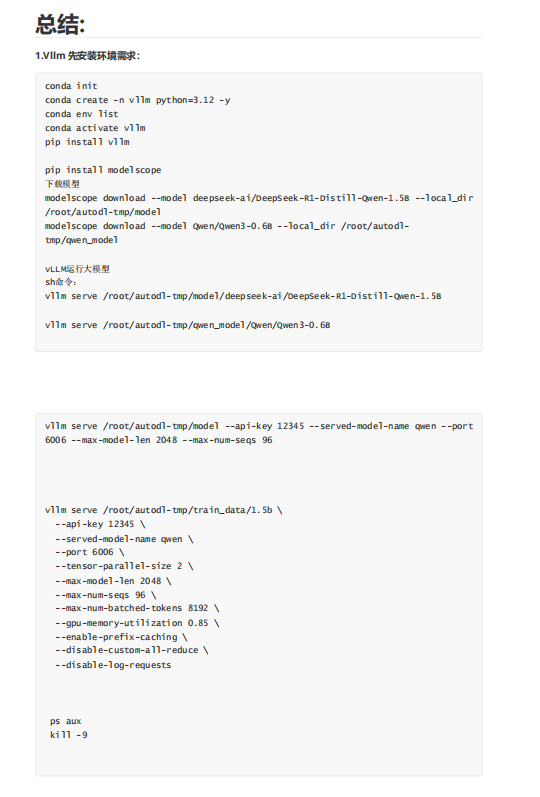

1. vLLM: set up the environment first:

conda init

conda create -n vllm python=3.12 -y

conda env list

conda activate vllm

pip install vllm

pip install modelscope
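
Before moving on, it can be worth confirming that both packages are importable in the active environment. The following is a minimal sketch, assuming the `vllm` conda environment is active and `python` resolves to its interpreter; `check_module` is a hypothetical helper name, not part of vLLM or ModelScope.

```shell
# Hypothetical helper (not part of vLLM): succeed only if the named Python
# module can be imported by the `python` found on PATH.
check_module() {
    python -c 'import importlib, sys; importlib.import_module(sys.argv[1])' "$1" \
        2>/dev/null
}

# Assumes the environment created above is active (conda activate vllm).
for mod in vllm modelscope; do
    if check_module "$mod"; then
        echo "$mod: OK"
    else
        echo "$mod: not importable"
    fi
done
```

If either line reports "not importable", the usual suspects are a different active environment or a failed `pip install`.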

Download the models

modelscope download --model deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --local_dir /root/autodl-tmp/model

modelscope download --model Qwen/Qwen3-0.6B --local_dir /root/autodl-tmp/qwen_model
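
After downloading, a quick sanity check of the target directory can help decide which path to pass to `vllm serve`, since these notes use both the download directory itself and a nested `<org>/<model>` path. The sketch below assumes the models use the Hugging Face layout (a `config.json` plus `*.safetensors` or `*.bin` weight files); `check_model_dir` is a hypothetical helper name.

```shell
# Hypothetical helper: check that a directory looks like a downloaded model.
# Assumption: Hugging Face layout, i.e. config.json plus at least one
# *.safetensors or *.bin weight file directly inside the directory.
check_model_dir() {
    dir="$1"
    [ -f "$dir/config.json" ] || { echo "missing $dir/config.json" >&2; return 1; }
    # An unmatched glob stays literal, so the -f test simply fails for it.
    for f in "$dir"/*.safetensors "$dir"/*.bin; do
        [ -f "$f" ] && return 0
    done
    echo "no weight files found in $dir" >&2
    return 1
}
```

For example, `check_model_dir /root/autodl-tmp/model` should succeed if the files were written directly into the `--local_dir` target; if it fails, look for a nested subdirectory with `ls -R`.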

Run a large model with vLLM

Shell commands:

vllm serve /root/autodl-tmp/model/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B

vllm serve /root/autodl-tmp/qwen_model/Qwen/Qwen3-0.6B

vllm serve /root/autodl-tmp/model --api-key 12345 --served-model-name qwen --port 6006 --max-model-len 2048 --max-num-seqs 96

vllm serve /root/autodl-tmp/train_data/1.5b \
  --api-key 12345 \
  --served-model-name qwen \
  --port 6006 \
  --tensor-parallel-size 2 \
  --max-model-len 2048 \
  --max-num-seqs 96 \
  --max-num-batched-tokens 8192 \
  --gpu-memory-utilization 0.85 \
  --enable-prefix-caching \
  --disable-custom-all-reduce \
  --disable-log-requests
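
Once a server started with `--api-key 12345 --served-model-name qwen --port 6006` is up, it can be queried through vLLM's OpenAI-compatible HTTP API. The following is a rough sketch, assuming the server is reachable on `localhost` and `curl` is installed; `chat_body` and `chat` are hypothetical helper names introduced only for illustration.

```shell
# Values taken from the `vllm serve` flags above; the host is an assumption.
BASE_URL="http://localhost:6006"
API_KEY="12345"
MODEL="qwen"   # must match --served-model-name

# Hypothetical helper: build a chat-completions request body for one prompt.
# The prompt is inserted verbatim, so it must not contain double quotes
# or backslashes.
chat_body() {
    printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}], "max_tokens": %s}' \
        "$MODEL" "$1" "${2:-128}"
}

# Hypothetical helper: send the prompt to the chat-completions endpoint.
# The API key is passed as a Bearer token.
chat() {
    curl -sS "$BASE_URL/v1/chat/completions" \
        -H "Content-Type: application/json" \
        -H "Authorization: Bearer $API_KEY" \
        -d "$(chat_body "$1" "${2:-128}")"
}
```

Calling `chat "Hello"` should return a JSON response. With `--max-model-len 2048`, the prompt and `max_tokens` together have to fit within 2048 tokens. A request to `$BASE_URL/v1/models` with the same `Authorization` header lists the served model names, which is a convenient way to check that the server is ready.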

ps aux

kill -9 <PID>
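
`kill -9` ends the process immediately, without giving it a chance to clean up. A gentler variant is to send the default SIGTERM first and escalate only if the process is still alive after a timeout. The sketch below assumes the PID has already been found, for example with `ps aux | grep '[v]llm serve'`; `stop_pid` is a hypothetical helper name.

```shell
# Hypothetical helper: stop a process by PID, escalating only if needed.
# Usage: stop_pid PID [TIMEOUT_SECONDS]
stop_pid() {
    pid="$1"
    timeout="${2:-10}"
    # Ask the process to exit cleanly first (SIGTERM is kill's default).
    # If the signal cannot be sent (already gone or not permitted), stop here.
    kill "$pid" 2>/dev/null || return 0
    while [ "$timeout" -gt 0 ]; do
        # kill -0 sends no signal; it only tests whether the PID still exists.
        kill -0 "$pid" 2>/dev/null || return 0
        sleep 1
        timeout=$((timeout - 1))
    done
    # Still alive after the timeout: force it (SIGKILL), as with `kill -9`.
    kill -9 "$pid" 2>/dev/null
    return 0
}
```

The `[v]` in the `grep` pattern keeps `grep` from matching its own command line in the `ps aux` output.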